Ask the Experts

Polling the pollsters

A guide to understanding political polls.

GOP gubernatorial candidate Doug Mastriano’s campaign signs are seen during a rally in May. Michael M. Santiago/Getty Images

Public polls. Internal polls. Even prank polls. With Pennsylvania set to elect a new governor and U.S. senator this November, there’s so much polling data floating around the political universe that it can be hard to make sense of it all. In an effort to pull back the curtain on the mechanisms behind political polling, City & State talked with three Pennsylvania-based pollsters to get their input on how to make sense of the barrage of political polls that have flooded the commonwealth this year. What follows is insight from Berwood Yost, director of the Center for Opinion Research and the director of the Floyd Institute for Public Policy at Franklin & Marshall College; Jim Lee, president, CEO and founder of Susquehanna Polling & Research; and Christopher Borick, director of the Muhlenberg College Institute of Public Opinion.

These conversations have been edited and condensed for length and clarity.

What can an accurate poll tell us about a political race or issue?

Berwood Yost: When we start talking about accuracy, we’re talking about something that's really hard to define. When you think about any poll, it's always the case that every poll has some degree of error associated with using a sample to represent some larger group of people. So, that affects your precision, which some people might call accuracy. The basic idea is that for any survey, anything you generate is an approximation to some result, and sometimes you can check those results and sometimes you can’t. I think the first thing that every reader should understand is that polls are approximations. There’s always error and we should expect every poll to have some amount of variability. They’re not precise. That's the starting point. For some items, it does not matter if you’re off a couple of points.

Let's just use the topic of the day: abortion. If that number is, say, two-thirds or more support abortion rights in many circumstances – doesn't really matter if it's 60% or 70% – you're in the ballpark and that's close enough to give you a sense of where people stand on that issue. So that's what polls can do. They give you an approximation of where things stand given who was asked the question and how the question was asked.

Jim Lee: I think the question is almost flawed in the sense that the word accuracy is kind of a social construct in polling. Who’s to say what’s accurate and what’s not? I’ve seen so many different interpretations of what an accurate poll is. I’ve been in plenty of House races and Senate races where we can have a 30-year incumbent that’s beating their opponent 49 to 26 on the head-to-head and they lose 51 to 49 on Election Day because they basically had support that wasn’t maxed out and all the undecideds broke for the challenger. That’s a real scenario that I’ve seen happen. We have an incumbent 49 on the head-to-head and they’re sitting on a 25-point lead, but they lose 51 to 49. Was that an inaccurate poll? I’ve had legislators look at me and say, “You have me up by 25 points.” And I said, “Well, you’ve been in office 30 years. Yeah, you were winning 49 to 26, but you’re under 50%. If voters weren’t for you by now, they were never gonna be for you. All the undecideds went for the challenger.” I guess I have to push back just a little bit on what’s an accurate poll. Even if the results differ from Election Day outcomes, that doesn’t necessarily mean it was inaccurate. Of course, we strive to make sure our polling is an accurate snapshot of public opinion. If it’s two months from Election Day, obviously, things are subject to change. If I had to boil it all down, I would say some of the mechanics of the survey influence the integrity of the survey in terms of how tight the fielding window was – if it was spread out over too long a period of time. You could have fluctuations in public opinion over the course of that time, so you really aren’t getting an accurate snapshot of public opinion. So a condensed field time is important. And making sure the calls are done randomly is a very important factor in a survey. 
The construction of the questions is important because even how you phrase a ballot question in a telephone survey could influence the results of that particular question.
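
The arithmetic behind Lee's incumbent scenario can be sketched in a few lines of Python. This is only an illustration: the function name `final_result` and the `share_to_challenger` parameter are hypothetical, and the sketch assumes that every respondent votes and that no decided voter switches sides.

```python
def final_result(incumbent, challenger, share_to_challenger=1.0):
    """Allocate undecided voters and return final (incumbent, challenger) percentages.

    Assumes decided voters do not switch and all undecideds end up voting.
    """
    undecided = 100 - incumbent - challenger
    to_challenger = undecided * share_to_challenger
    to_incumbent = undecided - to_challenger
    return incumbent + to_incumbent, challenger + to_challenger


# Lee's example: a 49-26 poll in which every undecided breaks for the challenger.
print(final_result(49, 26))

# For contrast, the same poll if undecideds split evenly (a hypothetical case).
print(final_result(49, 26, share_to_challenger=0.5))
```

Under the first set of assumptions the 25-point poll lead becomes a 49 to 51 loss, which is the outcome Lee describes. The poll's head-to-head numbers did not change; only the allocation of the 25% who were undecided did.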

Christopher Borick: I think one of the biggest challenges right now to thinking about polls is expectations of what they tell us, either individually or on the aggregate. I think expectations of what polls tell us exceed what their abilities are. Polls are a great way to get a general sense of the contours of elections. Is it a fairly competitive race? Is it a strong advantage for one or is it overwhelming for somebody? You can take these broad conclusions from polls and feel pretty good about their ability. The idea and perception that polls can give you pinpoint precision predictions of outcomes is, in my mind, not very reasonable. You’re gonna be disappointed probably if that’s how you view polls. We’re doing inferential statistics – we’re taking from a small group and making predictions to big groups. Anytime you’re doing that, even when it’s not a moving target like elections, you’re going to have some degree of error built in.
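
For readers who want to see the size of the sampling error the pollsters describe, here is a minimal Python sketch. It uses the standard textbook approximation for a simple random sample at a 95% confidence level, not a method attributed to any of the three pollsters. The sample sizes are hypothetical, and real polls involve weighting and other sources of error that this formula does not capture.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Approximate margin of error (as a proportion) for a simple random sample.

    p: estimated proportion (0.5 gives the widest margin)
    n: number of respondents
    z: critical value; 1.96 corresponds to roughly 95% confidence
    """
    return z * math.sqrt(p * (1 - p) / n)


# Hypothetical sample sizes; the margin shrinks slowly as the sample grows.
for n in (400, 800, 1600):
    print(n, "respondents: about +/-", round(100 * margin_of_error(0.5, n), 1), "points")
```

Because the margin depends on the square root of the sample size, quadrupling the number of respondents only cuts the sampling error in half, which is one reason pinpoint precision is not a realistic expectation.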

What is the best way to determine a good poll from a bad poll? Are there any obvious signs that differentiate the two?

Berwood Yost: I want to be careful saying “accurate” because there's a lot of different ways to ask questions, and a result can be accurate given the way the question is asked. I think we want to be careful to make sure that we don’t think the polls can do too much. That said, I think the simplest thing to understand is to take a look at the questions. Are those questions questions that you yourself could answer today? Do they make sense? Are they easy to understand? Are they clear? Were they written to provide all the possible alternatives? Are the response categories provided exhaustive and exclusive? If I’m going to focus on one thing to understand the quality of a poll, I want to understand the questions that were asked – and how the sample was drawn. Those two things tell me a good bit.

Jim Lee: There’s two ways to do that. The first would be – does it pass the smell test? Did the polling firm release all the mechanics of the survey? That’s step one. Step two would be: do the results seem to be an outlier or do they seem to line up with the average lead for whatever candidate it was based on everything that's been publicly profiled by RealClearPolitics? RealClearPolitics, which I rely on personally, aggregates all the surveys across the whole country, and if a poll comes out that purports to show a margin between the candidates that's substantially different from the average lead, then maybe it is an outlier. It could be right, but again, that’s the only way to really evaluate it, which is to evaluate it in relation to the averages posted on a reputable website that's tracking public polling in a host of states.
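
Lee's second check, comparing a poll's lead with the average lead across other public polls, might be sketched as follows. All of the numbers are made up for illustration, the function name `lead_vs_average` is hypothetical, and the 5-point threshold is an arbitrary assumption rather than a rule used by Lee or by any polling aggregator.

```python
from statistics import mean


def lead_vs_average(poll_lead, other_leads, threshold=5.0):
    """Compare one poll's lead (in points) with the average of other polls.

    Returns (average lead, gap from the average, whether the gap exceeds threshold).
    The threshold is an illustrative assumption, not an industry standard.
    """
    average = mean(other_leads)
    gap = poll_lead - average
    return average, gap, abs(gap) > threshold


# Hypothetical leads for the same candidate in five recent public polls.
recent_leads = [4, 6, 3, 5, 7]

# A new poll showing a 12-point lead sits 7 points above the average of 5.
print(lead_vs_average(12, recent_leads))
```

A flag from a check like this only says the poll differs from the pack. As Lee notes, the outlier could still turn out to be right.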

Christopher Borick: There’s different ways to look at it. How close are, for example, the final percentages that candidates get to the estimates in the polls? If you’re saying a candidate is going to get 51%, you might say, “How far off are they from those?” So that’s one way of doing it. Then you can also look at the margin – what was the margin? Say I have Candidate A getting 45% of the vote and Candidate B getting 40% of the vote. So that's a 5-point margin, but I'm pretty far off in terms of their actual percentages at the end – maybe one gets 53 and the other gets 48. You say, “Wow, your estimates are pretty far off, but your margin (is) not that far off. Your margin was a 5-point margin and in the end it was a 5-point margin.” So, margin matters. Estimates of the actual numbers are something we might evaluate at the end to see how close they are.
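
Borick's two yardsticks can be put side by side with a short Python sketch that uses the numbers from his example. The function name `poll_errors` is hypothetical, and the sketch assumes a simple two-candidate comparison.

```python
def poll_errors(poll, result):
    """Compare a poll with the final result for two candidates.

    poll and result are (candidate_a_pct, candidate_b_pct) tuples.
    Returns the per-candidate estimate errors and the error in the margin.
    """
    estimate_errors = (result[0] - poll[0], result[1] - poll[1])
    poll_margin = poll[0] - poll[1]
    result_margin = result[0] - result[1]
    return estimate_errors, result_margin - poll_margin


# Borick's example: the poll says 45-40, the election ends 53-48.
print(poll_errors((45, 40), (53, 48)))  # ((8, 8), 0)
```

Each candidate's share was underestimated by 8 points, yet the error in the margin is zero, which is why the two measures can tell different stories about the same poll.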

If there’s one thing voters should take away from this conversation about political polling, what would you want that to be?

Berwood Yost: Less focus on the horse race and more focus on the context. We ask a lot of questions: What are the issues of importance to the voters? What’s helping drive their choices? How do they feel about the various candidates? How do they feel about the performance of the president? How do they feel about their own personal well-being? There’s a whole host of things that can be really useful to understand that get well beyond the horse race. Given what I said earlier about polls not being precision instruments and that we need to be careful about that, the fundamentals of the race are less likely to change than is the horse race – if that makes sense. Let’s face it, campaigns are designed to move voters, at least some voters. They’re designed to encourage the partisans to get out, and they’re designed to attract at least some independent voters and maybe even weakly attached partisans from the other party. Campaigns by their nature are designed to change or influence that horse race question. They may try to influence other things, but I think it’s important to understand the fundamentals that are going to guide the vote. That’s really what to take away from the polls.

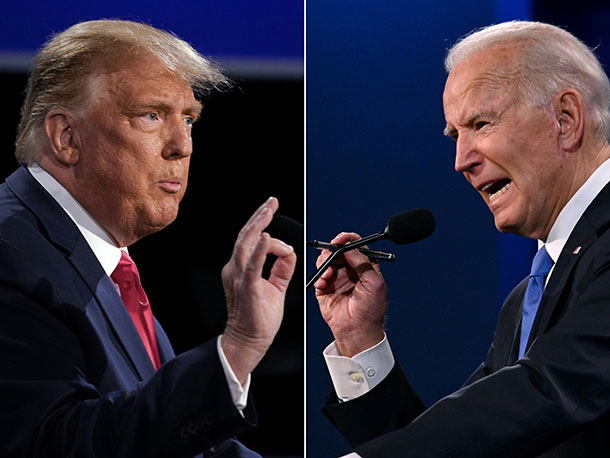

Jim Lee: At a time when our industry is under siege – because there's a perception that polling is getting worse, that the accuracy is getting worse and that our results aren’t to be trusted – that there are firms out there that care about the reputation of the industry and are doing their level best to make their polling accurate, regardless of which political party it tends to benefit. We believe the industry has a long future ahead, but that there are people in this industry that are making us all look bad by putting out polling that really doesn't pass the smell test and really doesn't have the integrity it should have because there was a lack of information about the mechanics of the survey. Because of that work, we're getting a bad rap. I was profiled on “Inside Edition” because so many polling firms really got it wrong in terms of the Biden/Trump numbers in a lot of the battleground states. We were cited as a firm that had it close in a lot of these contested states, and it was important to me that we did that interview because I've been saying for years now – I care about our industry and we're getting a black eye because there's some bad actors out there, but there's some good ones too.

Christopher Borick: I think people that are doing polling in the public light are seeking to provide some insight for the public into important facets of life – elections, policy concerns, issues. There’s lots of methodological challenges in doing that. Sometimes, those challenges can cause some problems as we make our estimates and do things. Ultimately, the ability to understand what the public thinks is a really valuable component of the democratic system. I think election polls … they’re the ones I'm ultimately least interested in, to be honest. Yeah, they tell us stories, and they tell us where races are, and everybody cares about the score. They want the score. I like those issue polls. I like post-election polls to understand what went on and how it came about so we have a better, improved understanding. I would say to folks, certainly keep your attention on election polls because people are interested, but there’s much more out there that tells us even more about what’s going on.
